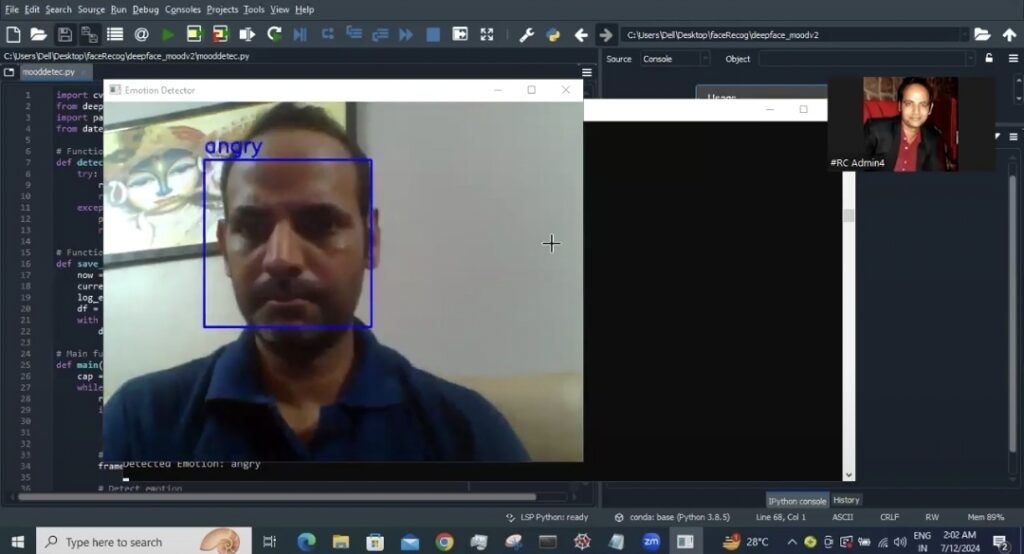

01. Facial Expression Tracker

This demo estimates a user’s observable affect from a camera feed by detecting a face and classifying its expression (e.g., happy, neutral, or angry). Technically, it is computer vision and machine-learning classification: it measures facial-expression signals, not genuine happiness.
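The classification step can be sketched as follows. This is a minimal illustration, not the demo's actual implementation: it assumes a face has already been detected and that some classifier (not specified here) has produced one raw score per expression label. The label set comes from the examples in the text; the names `softmax` and `classify_expression`, the `min_confidence` threshold, and the sample scores are assumptions made for illustration.

```python
import math

# Assumed label set, taken from the examples in the text (happy/neutral/angry).
LABELS = ("happy", "neutral", "angry")


def softmax(scores):
    """Convert raw classifier scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_expression(scores, min_confidence=0.6):
    """Return (label, probability) for the most likely expression.

    Returns ("uncertain", probability) when the top probability is below
    min_confidence (a hypothetical threshold), so ambiguous frames are not
    forced into a label.
    """
    if len(scores) != len(LABELS):
        raise ValueError("expected one score per label")
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return "uncertain", probs[best]
    return LABELS[best], probs[best]


# Made-up scores for a single detected face, for illustration only.
label, prob = classify_expression([2.5, 0.3, -1.0])
print(label, round(prob, 2))
```

Whatever label comes out describes the displayed expression only: a "happy" result means the face resembles a smile, not that the person is happy.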

Critical limits: Facial expression ≠ inner happiness (people can smile without being happy, and vice versa).

Accuracy depends on lighting, camera angle, face visibility, and demographic bias in training data.
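One way to make these dependencies visible is to break evaluation accuracy down by condition rather than reporting a single number. The sketch below assumes a set of labeled evaluation records with a metadata field per condition; the function name `accuracy_by_group`, the record layout, and the toy records are all hypothetical and are not measurements from this demo.

```python
from collections import defaultdict


def accuracy_by_group(records, key):
    """Return {group: accuracy} for labeled evaluation records.

    Each record is a dict with "predicted" and "actual" labels plus
    metadata fields such as the one named by key.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        group = rec[key]
        total[group] += 1
        if rec["predicted"] == rec["actual"]:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}


# Toy records, invented for illustration; not measurements from the demo.
records = [
    {"lighting": "bright", "predicted": "happy", "actual": "happy"},
    {"lighting": "bright", "predicted": "neutral", "actual": "neutral"},
    {"lighting": "dim", "predicted": "neutral", "actual": "happy"},
    {"lighting": "dim", "predicted": "angry", "actual": "angry"},
]
print(accuracy_by_group(records, "lighting"))
```

The same function can be called with a different `key` (for example camera angle or a demographic attribute, if the evaluation set records one) to check whether accuracy differs across groups.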